Every now and then I get sick of the fact that all my goals are massive, overwhelming and scary. It’d be nice to just achieve something, for once. So I had an idea for a mini-project to help me recharge, and I thought it’d be fun to share the whole journey with you.

To date, I’ve written over one hundred articles for Puttylike. And I’ve been curious about computer-generated writing for years. So here’s the plan: I’m going to feed all my articles into an Artificial Intelligence and see if computers can learn to write like I do. I’m no expert in AI… but that’s precisely what makes this idea so enticing.

Stage zero: Expectations

One of the reasons my projects often balloon in scope is that I can’t bear the thought of doing anything imperfectly. So I’m consciously releasing myself from perfection before I even start.

Ideally, my new AI friend will auto-generate a complete and comprehensible article, filled with pearls of wisdom and hilarious wit. But just generating anything would be an achievement. The aim for this mini-project is simply to have fun while creating something.

My mantra will be this: something imperfect that exists is better than something perfect that doesn’t!

Stage one: Learning

Mini-projects inevitably involve a lot of learning. A quick search reveals what I don’t know about AI text generation, which – it turns out – is a lot!

Happily, I always find the revealing-my-vast-ignorance-to-myself stage of projects enjoyable.

My initial plan is to harness the power of the biggest, coolest beast in the world of AI text generation: GPT3. This network generates impressively human-seeming output in all kinds of areas. Check out the poem it wrote — it’s called “Future Consensus Forecasts” by Board of Governors of the Federal Reserve System:

No one knows what will come

Forecasts foretell a rise in power

That is probably not to be

Deficit of several lines

The golden future has tourniquets

No one likes it

Sure, it’s nonsense, but it’s fun. I’m already anticipating what GPT3 will make out of my writing.

Unfortunately, I hit an immediate stumbling block: GPT3 isn’t fully open to the public.

And while filling in the form to join the waitlist, I decide that “automating my Puttylike articles” is probably not a good enough reason to make it into their exclusive program.

Stage one-and-a-bit: Relearning

Trial and error is inevitable. My first idea didn’t work out, so I’ll find another way.

More googling reveals that it’s (relatively) easy to train your own neural network on some text. I won’t go too deeply into the technicalities, but the basic idea is that the network runs some advanced mathematics on whatever you feed it, and it figures out how to come up with new stuff that’s similar.
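To make "learn from text, then produce something similar" concrete, here is a minimal sketch. It is not a neural network and not the method from the tutorial: it swaps in a much simpler technique, a word-level Markov chain, which only records which word followed which. The `train` and `generate` names and the one-line corpus are invented for illustration.

```python
import random
from collections import defaultdict

def train(text):
    """Record, for every word, the words that followed it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Walk the table, picking a random recorded follower at each step."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        out.append(rng.choice(options))
    return " ".join(out)

# Hypothetical stand-in for a corpus of articles.
corpus = "something imperfect that exists is better than something perfect that does not"
model = train(corpus)
sentence = generate(model, "something", 8)
print(sentence)
```

A real network replaces the lookup table with learned weights, which lets it generalise beyond word pairs it has literally seen, but the loop of "predict the next piece, append it, repeat" is the same shape.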

The disadvantage of doing this myself is that my network will lack the enormous power and sophistication of GPT3. I’m much more likely to end up with a bunch of nonsensical sentences than a plausible article which imitates my style.

However, the advantage is that this is actually physically possible, which is a strong enough reason to go for it. (Something imperfect that exists is better than something perfect that doesn’t!)

I found a tutorial which passes my Useful Learning Test — i.e. it contains mostly things I already know and a small amount of things I don’t — and I’m going to go try it immediately.

Stage two: Experimentation

As I write, a neural network is training itself on a small amount of test data. This is extremely exciting!

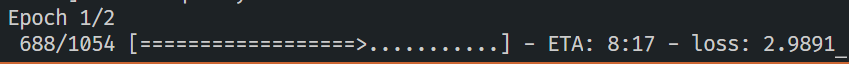

I’m watching this progress bar right now:

One yardstick for the power of these networks is their number of parameters, which is a bit like the size of their brains. My little home-made AI has a grand total of 2,939,141 parameters. Sounds good, right? Well, for comparison, GPT3 has over 175 billion parameters.
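For a rough sense of where a number like that comes from, here is a small sketch of the arithmetic. The layer sizes are hypothetical (the actual architecture isn't given here), and 175 billion is used as a round figure for GPT3. Only the counting rule for a fully connected layer and the final ratio are the point.

```python
def dense_layer_params(n_inputs, n_outputs):
    """One weight per input-output connection, plus one bias per output."""
    return n_inputs * n_outputs + n_outputs

# Hypothetical layer sizes, purely for illustration.
layers = [(100, 256), (256, 256), (256, 100)]
total = sum(dense_layer_params(i, o) for i, o in layers)

home_made = 2_939_141      # parameter count quoted above
gpt3 = 175_000_000_000     # "over 175 billion", taken as a round number
ratio = gpt3 / home_made

print(f"toy example: {total:,} parameters")
print(f"GPT3 is roughly {ratio:,.0f} times larger")
```

So the gap is a factor of nearly 60,000, which is why the two aren't really playing the same game.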

A quick glance at animal brain size shows that GPT3 apparently has more parameters than human brains have neurons, while my network has the comparative mental power of…a guppy.

Unfortunately, even with this modest start, the test training will apparently take around 30 minutes on my machine. This is mildly concerning. At this speed, it looks like I might have to train the network on my articles for several days, once I’ve validated that this actually works.

I could spend some money and figure out how to get this running faster on the cloud, or do some magic to speed up the computations, but ‘imperfection is okay’.

Meanwhile, the training has completed. Let’s see the results.

Stage three: Doing

The test data worked. Well, sort of. Here’s what I get back out of it when I ask for a new sentence:

Hovered ice fish could procured thus cut chief article maintenance triumph enemy increased difficulty …

It goes on like this for quite a while. It seems I have not yet replaced myself with a robot guppy.

I’m actually really happy with this result. I barely trained it — on the wrong data — and it came up with…some complete gibberish. But it’s basically what I expected.

In theory, I can now train it on the right data and for a proper length of time, and then I will discover what an AI makes out of the totality of my Puttylike articles.

I love these moments in a project. Realizing this is actually possible is so exciting!

And with that, I’ll set it training on the real data. See you soon.

Stage four: Results

Welcome back!

Imagine doing nothing but reading my blog posts for days on end. I’ve thought about what that would feel like as I watched the progress bars gradually tick back and forth for the last three days.

Let’s see what effect it had on my AI.

AI, give me a sentence.

non fiction writer world might custard physics mental health writer realised sime shings shings shings shings shings

Oh wow. I mean. It’s, uh… certainly grasped something of the subjects I tend to mention. I’m not sure it has quite captured my writing style. But let’s try again, I suppose:

television actually paid attention experience might go something like joylessly scroll option shings shings shings shings shings shings shings shings shings shings

Hmmm. Well…

I see the AI has at least nailed my famous catchphrase, shings shings shings shings shings. Although I think my delivery is better.

Let’s go one more time. Maybe this glitch doesn’t always happen?

scared fine super touch emotions identified dealt usual fears realised part us terrified caging for get sime shings shings shings

Well, fair enough. I can’t disagree.

Stage five: Concluding

Was this project worth several days of my life?

I find this question impossible to answer. As always, I went in with hopes and dreams and a whole lot of ignorance. I learned plenty in the process (in particular, I learned how to teach a computer to repeatedly say “shings”), and I had a lot of fun documenting the journey.

Honestly, the results aren’t what I hoped for, but I suppose I knew that training my own network (potentially wrongly!) was always going to be worse than using a ‘real’ one. Maybe I’ll return to this project someday, if I manage to get access to GPT3 or a similar technology.

Or, maybe I’ll learn more about training networks and figure out how to make this one smarter! Perhaps instead of the brain size of a guppy I could try one the size of a mongoose! Or instead of training it on my own computer I’ll use some cloud computer to run 24/7 for a week. Or maybe…

You know what? Any project that ends with me filled with enthusiasm and brimming with ideas of how to improve it in the future is a success.

And one thing I’ve always said about success is, sime shings shings shings shi

Your Turn

Have you experimented with any cool little projects lately? Or are there any you’ve been dying to try? What’s something achievable you could have fun with now? Why don’t you comment below and shings?

I always look forward to your articles, Neil, and I loved reading about your journey on this project. I’ve wanted to try GPT-3 ever since reading about it in Stew Fortier’s newsletter; it seems such a gamechanger. I too tend to raise my expectations for yet-to-be-started projects and end up not starting at all, so it’s refreshing to see experimentation without expectations, just because it’s fun to do something new.

I’ve stayed away from most of my projects these past few months and have been doing lots of reading for fun which is freeing because I started to put too much pressure on myself for every single project that I had. I’m now starting a tiny art journal for fun, creating a small one makes it hard to think about it too much 🙂

The tiny journal sounds really fun! It’s awesome you’ve found a way to take the pressure off – it’s amazing how much I hold myself back without realising. Glad you had fun with the article too 😀

Ah, it always feels so nice to read an article and laugh aloud, despite the resulting concerned glances from my office mates. Although many articles on this site resonate, this one touched on something special: the feeling of joy when learning something new, no matter how complex and frustrating the road ahead seems. Most of the time I am very under-stimulated at work, so every now and then I fulfill my need for creativity by trying to build something new out of nothing. The raw potential in the world is so alluring that it seems a waste to not take the “free time” to explore. No, my amazing process-improvements are rarely operationalized due to the lack of resources and general enthusiasm on the part of my team/management, but the fun is in the discovery. Anyway, I loved reading about your experience and feel inspired to go make something imperfect of my own!

Haha, I’m delighted this article managed to create a concerned glance or two! And I’m even happier it helped you feel inspired to go make something! I absolutely know the feeling of loving learning and creating and yet finding ways of sabotaging myself out of it. Let us know what you create!

I’ve been considering how to incorporate machine/AI generated text into a project, and your experiences have given me great insight on the nuts and bolts of working with neural networks. It has also helped me check my inflated expectations to something more doable. Loved the article and excited for that mongoose someday!

Haha, I’m also very excited for the mongoose! I’ve got some free time next month that I’m mentally blocking off for further experimentation with this… if anything actually good comes out of it then I’ll let you know 🙂 I’m sure there’s lots of better sources out there on the technical details but hopefully this journey showed you a _bit_ of the path for your own project 🙂 (even if it’s just what NOT to do)

Fascinating, I wonder where the heck the shings came from!

I haven’t looked into it too deeply but I suspect my network found a sequence it “liked” (i.e. a sequence it could predict very reliably, like “after an i comes an n, after an n comes a g, etc”) and wasn’t smart enough to see beyond it to the larger structure. Caught in a local minimum, as they say.
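Here is a toy sketch of how that kind of loop can happen. It is only an illustration of one plausible failure mode, not a diagnosis of the actual network: the probabilities below are invented, and the "model" is just a lookup table. The generator always picks the single most likely next word (greedy decoding), so once it reaches a word whose most likely successor is itself, it never escapes.

```python
# Hypothetical next-word probabilities, invented for illustration.
next_word = {
    "get": {"sime": 0.6, "things": 0.4},
    "sime": {"shings": 0.9, "get": 0.1},
    "shings": {"shings": 0.8, "get": 0.2},
}

def greedy(start, steps):
    """Always pick the single most probable next word."""
    out = [start]
    for _ in range(steps):
        choices = next_word.get(out[-1])
        if choices is None:  # no known successor: stop early
            break
        out.append(max(choices, key=choices.get))
    return out

words = greedy("get", 6)
print(" ".join(words))  # get sime shings shings shings shings shings
```

Sampling from the probabilities instead of always taking the top choice would occasionally follow the 20% exit back to "get", which is one common way generators avoid getting stuck like this.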

I have used inferkit as an interface for AI text generation, and it can range anywhere from impressive to shings shings shings. The longer you let it run the weirder it gets. I’ve let it write some poetry (it wrote an Emily Dickinson emulation that worked pretty darn well). I also let it write some new meditations based off of Marcus Aurelius’s originals (@artificial_aurelius on Instagram). I played around with artbreeder for a little bit too.

That’s a very cool tip, thanks Ben! I’ll definitely check that out, along with your aurelius bot – which sounds extremely cool and also combines several of my interests at once 😀

Thanks for the article! A cool little project from last week: I run my own YouTube channel in Italian (my native language) and I have recorded some interviews in French (my second language). I decided to subtitle the videos in Italian to make them understandable by my Italian audience. I first had to correct the imperfect automatic French subtitles from YouTube. Then I translated them into Italian with the help of an automated tool + a loooot of corrections. This took me a couple of days in the end but was fun. I can see it now as a completed project, for once! Yes, it’s far from perfect, but done.

Yay! That sounds very satisfying, and it’s cool that you were able to automate some of the process 🙂 “Far from perfect, but done” is often way better!

That’s hilarious, Neil! What a cool project. Although it would have been fantastic if the AI had produced an understandable sentence, it still must be gratifying to know that a computer can’t totally replace you.

Well, THIS computer can’t! But maybe another one can. I’m pleased you enjoyed it, thanks Karen 🙂

Oh my gosh, AI-generated text on puttylike? AKA my two favourite things combined? I fell in love with the topic about a year ago and decided to base my university dissertation on it without much deep thought despite having no technical background, so I kind of had to figure things out (but the pressure also made it less fun). Although the most recent hype is GPT-3, the previous model, GPT-2, is also capable of producing really nice stuff. It is publicly available, and you can quite easily train it on your own data up to the medium size (355 million parameters, I believe). I am actually impressed that you trained your own network from scratch (and now I want to do it), but look into GPT-2 and you might be surprised how easy it is to get intelligible text to come out.

You know, I can’t believe it never occurred to me until your comment to look into the possibility of using GPT-2 instead of GPT-3! So obvious in hindsight..! I will definitely try that next time I have a free day to play around with this, thank you 🙂

This was a great read. I love doing projects like these and it is so gratifying to make something that in the end kinda works. If anyone wants to play around with more machine learning from a beginner standpoint I can highly recommend ml5, friendly machine learning and Daniel Shiffman’s YouTube channel codingtrain.

Yes, I love Daniel Shiffman! Such a great channel. I will definitely check out that other stuff, thank you 😀 glad you had fun with the article 🙂

Also, it’s worth checking out RunwayML if you want to get some computing power in the cloud. I think you get some for free to get started and you get easy access to some pretty awesome ml algorithms.